LLMs and Algorithms: The Key to Prescriptive Analytics

How product managers can harness prescriptive analytics to deliver innovative, customer-focused solutions.

As product managers, our role is not just to know what large language models (LLMs) can do but to understand how they work at a technical level. LLMs generate outputs based on probabilities derived from massive datasets, but their outputs are limited by inherent biases, numerical precision challenges, and dependency on structured inputs. While it may seem sufficient to blindly rely on their ability to answer complex questions with expert recommendations in seconds, deeper technical knowledge is crucial to develop intuitive products in this burgeoning space.

In this article, I explore how product managers can pair prescriptive analytics powered by large language models (LLMs) with optimization algorithms, understand the technical foundations and limitations of both, and apply real-world use cases and software tools to deliver intuitive, customer-focused solutions that drive market differentiation.

Image from Freepik

Product Managers Need to Know HOW

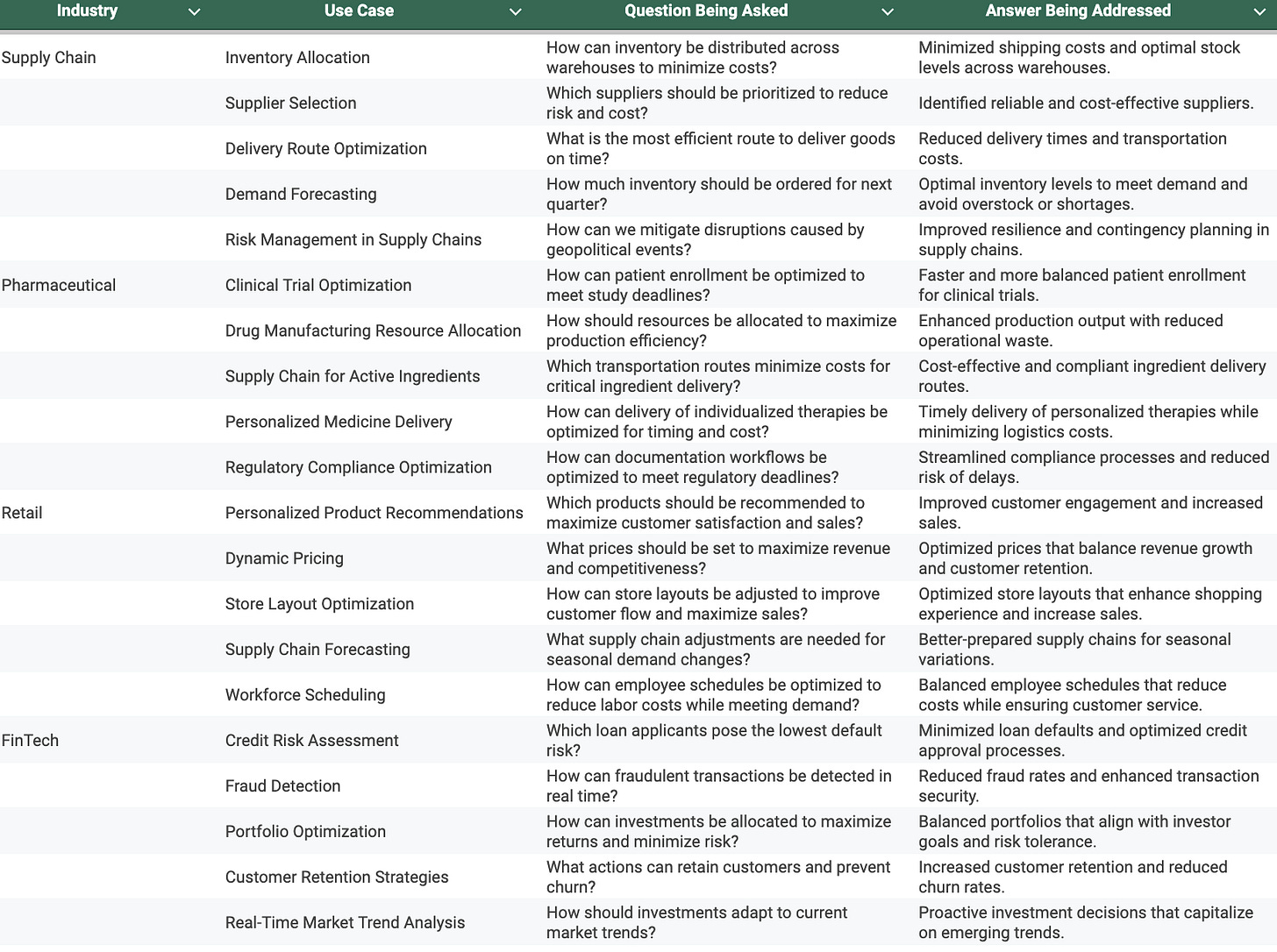

It’s not enough for product managers to know what LLMs can do. PMs must know how they work, because change is the only constant in AI: augmentation, enhancement, and learning are key to future development efforts. By way of example, the accompanying table summarizes the end-user questions and answers possible with a fully realized LLM for different market use cases.

This is great for marketing the end solution, but how exactly do you get there? Ultimately, product managers serve as the bridge between technical capabilities, engineering development, and user needs. By mastering how LLMs function, we can better align their potential with intuitive, customer-focused solutions, ensuring that our products deliver both innovation and usability.

Limitations of LLMs and the Need for Optimization Algorithms

While Large Language Models (LLMs) are transformative in generating human-readable insights, they come with notable limitations that require careful consideration.

1. LLMs Lack Numerical Precision

LLMs excel at interpreting and contextualizing data but are not designed for high-accuracy numerical calculations. For instance, while they can summarize statistical trends, their numerical outputs may lack the precision required for complex optimization tasks like supply chain allocation or financial forecasting.

2. Algorithm Dependency

End solutions rely on pairing LLMs with optimization algorithms to deliver precise, actionable answers. For example, when an LLM interprets a question like “How can we optimize resource allocation?” it needs algorithms such as Linear Programming or Genetic Algorithms to compute the optimal answer based on quantitative constraints.

3. Complexity Management

Handling large-scale, multi-variable problems requires optimization algorithms to address scalability and resource limitations. LLMs alone cannot efficiently solve problems with intricate dependencies or constraints.

Why Pairing LLMs with Optimization Algorithms is Essential

Integrating LLMs with optimization algorithms combines the strengths of both systems. LLMs bring contextual reasoning and human-like communication, while optimization algorithms ensure numerical precision and scalability. Together, they create robust solutions that not only calculate the best outcomes but also provide clear, actionable recommendations tailored to complex business scenarios. This synergy is the key to unlocking the full potential of prescriptive analytics.

Popular Optimization Algorithms

Optimization algorithms are the backbone of prescriptive analytics, enabling businesses to solve complex problems with precision and scalability. Among the most popular are:

Linear Programming (LP): Ideal for problems with linear relationships, LP optimizes resource allocation under constraints, such as minimizing costs or maximizing efficiency.

Genetic Algorithms (GAs): Inspired by natural selection, GAs excel at solving non-linear and combinatorial problems, such as scheduling and feature selection.

Simulated Annealing: Mimicking the annealing process in metallurgy, this algorithm occasionally accepts worse solutions early in the search so that it can escape local minima, then settles toward a near-optimal solution for complex problems such as route optimization.
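To make the last of these concrete, here is a minimal sketch of simulated annealing applied to a small route optimization problem. The stop coordinates, the cooling schedule, and the function names (`simulated_annealing`, `tour_length`, `reverse_segment`) are illustrative assumptions of mine, not taken from any particular product or library.

```python
import math
import random


def simulated_annealing(cost, start, neighbor, steps=5000, t_start=10.0, t_end=0.001, seed=0):
    """Minimize `cost` starting from `start`; returns (best_state, best_cost)."""
    rng = random.Random(seed)
    current, current_cost = start, cost(start)
    best, best_cost = current, current_cost
    for step in range(steps):
        # Geometric cooling: the temperature falls smoothly from t_start to t_end.
        temperature = t_start * (t_end / t_start) ** (step / (steps - 1))
        candidate = neighbor(current, rng)
        candidate_cost = cost(candidate)
        delta = candidate_cost - current_cost
        # Improvements are always accepted; worse moves are accepted with
        # probability exp(-delta / T), which shrinks as the temperature drops.
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            current, current_cost = candidate, candidate_cost
            if current_cost < best_cost:
                best, best_cost = current, current_cost
    return best, best_cost


def tour_length(route, points):
    """Length of the closed tour that visits the points in the given order."""
    return sum(
        math.dist(points[route[i]], points[route[(i + 1) % len(route)]])
        for i in range(len(route))
    )


def reverse_segment(route, rng):
    """Neighbor move: reverse a randomly chosen stretch of the route."""
    i, j = sorted(rng.sample(range(len(route)), 2))
    return route[:i] + route[i:j + 1][::-1] + route[j + 1:]


# Hypothetical delivery stops on a 10 x 10 grid.
stops = [(0, 0), (8, 1), (1, 7), (9, 9), (4, 4), (7, 5), (2, 2), (5, 9)]
start_route = list(range(len(stops)))
best_route, best_length = simulated_annealing(
    cost=lambda route: tour_length(route, stops),
    start=start_route,
    neighbor=reverse_segment,
)
print(best_route, round(best_length, 2))
```

The result is never worse than the starting route, but it is not guaranteed to be the global optimum. That trade-off between speed and certainty is worth raising with engineering early.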

These algorithms, along with others like Mixed-Integer Programming, Dynamic Programming, and Particle Swarm Optimization, are essential tools for addressing diverse industry challenges. The table below provides detailed insights into these and other algorithms, available software, their features, and real-world use cases. Product managers must familiarize themselves with these methods to better guide their engineering and program support teams in leveraging the right solutions for specific problems, ensuring efficient and innovative outcomes.

Integrating LLMs and Optimization Algorithms

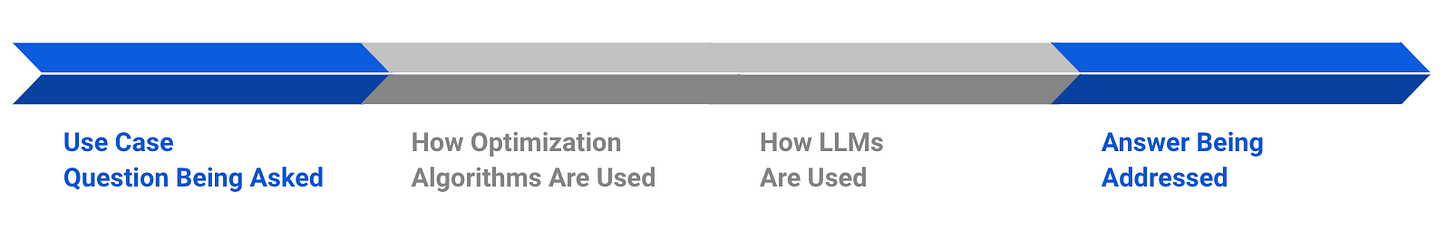

The Path from Question to Answer

The integration of Large Language Models (LLMs) and optimization algorithms bridges the gap between complex queries and actionable solutions. The workflow begins with LLMs interpreting high-level, natural language questions, such as “How can we optimize inventory across warehouses to reduce costs?” LLMs translate this into structured inputs, identifying objectives, constraints, and variables.

Optimization algorithms such as Linear Programming or Genetic Algorithms then perform precise computations to generate the best solution under the defined constraints. Once results are computed, LLMs convert the technical outputs into clear, human-readable recommendations, explaining trade-offs and strategic options.
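The three stages can be sketched end to end in a few lines. In this sketch the two LLM steps are stubs (`interpret_question` returns a hard-coded specification and `explain` fills a template), and the solver only handles bounded linear programs with two variables by checking every corner of the feasible region. All names, costs, and capacities are hypothetical; a production system would call a real model and a proper LP solver.

```python
from itertools import combinations


def interpret_question(question):
    """Stub standing in for an LLM call that returns a structured problem spec."""
    # Hypothetical output; a real system would prompt a model and validate its reply.
    return {
        "variables": ["units_at_A", "units_at_B"],
        "objective": {"minimize": [4.0, 6.0]},  # assumed cost per unit at A and B
        "constraints": [  # each row means a*x + b*y <= limit
            {"coeffs": [-1.0, -1.0], "limit": -100.0, "label": "meet demand of 100 units"},
            {"coeffs": [1.0, 0.0], "limit": 70.0, "label": "capacity of warehouse A"},
            {"coeffs": [0.0, 1.0], "limit": 80.0, "label": "capacity of warehouse B"},
            {"coeffs": [-1.0, 0.0], "limit": 0.0, "label": "units_at_A is non-negative"},
            {"coeffs": [0.0, -1.0], "limit": 0.0, "label": "units_at_B is non-negative"},
        ],
    }


def solve_two_variable_lp(spec, tol=1e-9):
    """Exact solve by testing every corner of the feasible region (bounded 2-variable LPs only)."""
    cost = spec["objective"]["minimize"]
    rows = spec["constraints"]
    best = None
    for first, second in combinations(rows, 2):
        (a1, b1), c1 = first["coeffs"], first["limit"]
        (a2, b2), c2 = second["coeffs"], second["limit"]
        det = a1 * b2 - a2 * b1
        if abs(det) < tol:
            continue  # parallel constraint lines never meet at a corner
        # Cramer's rule for the intersection of the two constraint lines.
        x = (c1 * b2 - c2 * b1) / det
        y = (a1 * c2 - a2 * c1) / det
        feasible = all(r["coeffs"][0] * x + r["coeffs"][1] * y <= r["limit"] + tol for r in rows)
        if feasible:
            value = cost[0] * x + cost[1] * y
            if best is None or value < best["cost"]:
                best = {"values": [x, y], "cost": value}
    return best


def explain(spec, solution):
    """Stub standing in for the LLM step that turns solver output into a recommendation."""
    parts = [f"{name} = {value:.0f}" for name, value in zip(spec["variables"], solution["values"])]
    return f"Recommended plan: {', '.join(parts)} at a total cost of {solution['cost']:.0f}."


question = "How can we optimize inventory across warehouses to reduce costs?"
spec = interpret_question(question)
solution = solve_two_variable_lp(spec)
print(explain(spec, solution))
# prints: Recommended plan: units_at_A = 70, units_at_B = 30 at a total cost of 460.
```

Note where the numbers come from: the solver, not the language model. The model's job in this design is translation at both ends.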

The table above now also shows how these systems pair with optimization algorithms to move from high-level queries to actionable answers. Understanding this workflow allows product managers to ask the right questions, define realistic use cases, and set appropriate expectations for stakeholders.

Importance for Product Managers

Product managers must deeply understand this integration to align business goals with technical implementation. This knowledge ensures they can guide engineering teams in selecting the right algorithms and effectively communicate outcomes to stakeholders. By mastering this relationship, product managers enable their teams to unlock the full potential of prescriptive analytics, delivering impactful solutions that differentiate their products in competitive markets.

Example Use Case: Dynamic Inventory Allocation

Dynamic inventory allocation tackles the question: “How can inventory be distributed across warehouses to minimize costs?” This challenge involves balancing supply and demand across multiple locations while optimizing transportation and storage expenses.

How It Works: Linear Programming (LP) is used to compute the most efficient distribution by considering constraints like warehouse capacity, transportation costs, and delivery deadlines. The algorithm identifies the optimal inventory levels for each warehouse to minimize overall costs.
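One common way to write this down is the classic transportation problem. The symbols are my own assumptions for illustration: W is the set of warehouses, R the set of demand regions, x_ij the units shipped from warehouse i to region j, c_ij the combined storage and transport cost per unit, s_i the capacity of warehouse i, d_j the demand in region j, t_ij the transit time, and T_j the delivery deadline.

```latex
\begin{aligned}
\min_{x}\quad & \sum_{i \in W} \sum_{j \in R} c_{ij}\, x_{ij} \\
\text{subject to}\quad
& \sum_{j \in R} x_{ij} \le s_i && \forall i \in W \quad \text{(warehouse capacity)} \\
& \sum_{i \in W} x_{ij} = d_j && \forall j \in R \quad \text{(regional demand)} \\
& x_{ij} = 0 && \text{if } t_{ij} > T_j \quad \text{(delivery deadline)} \\
& x_{ij} \ge 0 && \forall i \in W,\ j \in R
\end{aligned}
```

The problem only has a solution if total capacity covers total demand over the routes that meet their deadlines, which is a useful sanity check to run before any solver is invoked.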

LLM Integration: Large Language Models (LLMs) play a critical role by translating the algorithm’s raw outputs into actionable recommendations. For example, LLMs provide context, explaining trade-offs such as prioritizing faster delivery in high-demand regions versus minimizing long-haul transportation costs.

Benefits: This integrated approach delivers tangible outcomes:

Reduced Costs: Optimized inventory reduces storage and transportation expenses.

Improved Logistics: Efficient allocation ensures timely delivery, preventing overstock or shortages.

Better Customer Satisfaction: Meeting demand accurately leads to faster service and fewer disruptions.

Dynamic inventory allocation showcases the power of combining LP and LLMs, driving strategic decisions that enhance both operational efficiency and customer experience.

Benefits of Pairing LLMs with Optimization Algorithms

The integration of Large Language Models (LLMs) with optimization algorithms unlocks significant advantages for businesses by combining contextual reasoning with computational precision.

1. User-Friendly Problem Definition

LLMs bridge the gap between technical systems and non-technical users by transforming complex questions into structured, machine-readable inputs. For instance, an executive can ask, “How do we optimize warehouse inventory?” and LLMs translate this into parameters for an optimization algorithm.
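Because a model's reply is free text until proven otherwise, it is usually worth validating the structured output before it reaches a solver. The sketch below assumes the model has been asked to answer in JSON with four fields; the field names and the `ProblemSpec` class are hypothetical, not part of any specific API.

```python
import json
from dataclasses import dataclass

REQUIRED_FIELDS = {"objective", "target", "variables", "constraints"}


@dataclass
class ProblemSpec:
    objective: str     # "minimize" or "maximize"
    target: str        # the quantity being optimized, e.g. "total_cost"
    variables: list    # names of the decision variables
    constraints: list  # constraint expressions, kept as text in this sketch


def parse_problem_spec(raw_reply):
    """Parse and validate a JSON reply; raises ValueError if it is unusable."""
    try:
        data = json.loads(raw_reply)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    missing = REQUIRED_FIELDS - set(data)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    if data["objective"] not in ("minimize", "maximize"):
        raise ValueError("objective must be 'minimize' or 'maximize'")
    if not data["variables"]:
        raise ValueError("at least one decision variable is required")
    return ProblemSpec(
        objective=data["objective"],
        target=data["target"],
        variables=list(data["variables"]),
        constraints=list(data["constraints"]),
    )


# Hypothetical model reply to "How do we optimize warehouse inventory?"
reply = json.dumps({
    "objective": "minimize",
    "target": "total_cost",
    "variables": ["units_at_A", "units_at_B"],
    "constraints": ["units_at_A + units_at_B >= 100", "units_at_A <= 70", "units_at_B <= 80"],
})
spec = parse_problem_spec(reply)
print(spec.objective, spec.target, spec.variables)
```

A rejected reply can be sent back to the model with the error message, which tends to be a cheaper fix than letting a malformed problem reach the optimizer.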

2. Enhanced Decision Support

Optimization algorithms compute precise solutions based on defined constraints, such as minimizing costs or maximizing efficiency. LLMs complement this by contextualizing outputs, explaining trade-offs, and presenting recommendations in an accessible manner.

3. Dynamic Refinement

Through continuous feedback loops that route real-world results back into the system, the LLM's interpretation of inputs and the resulting recommendations can be refined over time. This keeps the solutions relevant and adaptive to changing conditions, such as fluctuating demand or resource constraints.
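A feedback loop of this kind can be as simple as updating the inputs with observed results and running the optimization again. In the sketch below, the exponential smoothing rule, the regions, and the proportional `allocate` function are all assumptions chosen for brevity; `allocate` is only a placeholder for a full optimizer run.

```python
def update_forecast(previous, observed, alpha=0.5):
    """Blend the previous forecast with observed demand (simple exponential smoothing)."""
    return {
        region: alpha * observed[region] + (1 - alpha) * previous[region]
        for region in previous
    }


def allocate(stock, forecast):
    """Placeholder for the optimizer: split stock in proportion to forecast demand."""
    total = sum(forecast.values())
    return {region: stock * demand / total for region, demand in forecast.items()}


# Hypothetical starting forecast and one period of observed demand.
forecast = {"north": 100.0, "south": 100.0}
observed = {"north": 140.0, "south": 80.0}

plan_before = allocate(210, forecast)
forecast = update_forecast(forecast, observed)
plan_after = allocate(210, forecast)

print("before:", plan_before)
print("after: ", plan_after)
```

After one round of feedback the plan shifts stock toward the region where demand ran ahead of the forecast. The smoothing factor `alpha` controls how quickly the system reacts, and it is a product decision as much as an engineering one.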

Together, LLMs and optimization algorithms provide a robust framework for solving complex problems, empowering businesses with actionable insights that are not only accurate but also user-friendly and adaptable. This pairing is essential for delivering impactful, scalable solutions in competitive markets.

Inspiration and Attribution

Image from Freepik

OpenAI API Tools

A Compact Guide to Large Language Models, Databricks

Mastering LLM Optimization: Key Strategies for Enhanced Performance and Efficiency, Ideas2IT

Mastering LLM Optimization With These 5 Essential Techniques, Attri, October 9, 2024