Building AI agents that mimic the key roles in a marketing department is now a reality. In my last article, I presented how this can be done at a high level. In this article, I provide the details: a playbook for how to collect and catalog every asset, transcribe audio with diarization, clean and tag the data, and chunk and embed it into a vector database. This becomes the retrieval backbone for each of the role-based agents you will create and train later. On top of it sits an orchestration layer where each agent (SEO Manager, Copywriter, Analyst, or whichever role you need) has its own knowledge scope, style, and toolset. Guardrails ensure outputs are grounded and cite their sources. Deployment requires enterprise practices: RBAC, encryption, governance, and human review. The result is a set of digital colleagues that a head of marketing can call on individually or together to plan, write, optimize, and analyze.

This is the blueprint for teams exploring how GenAI moves from vision to reality.

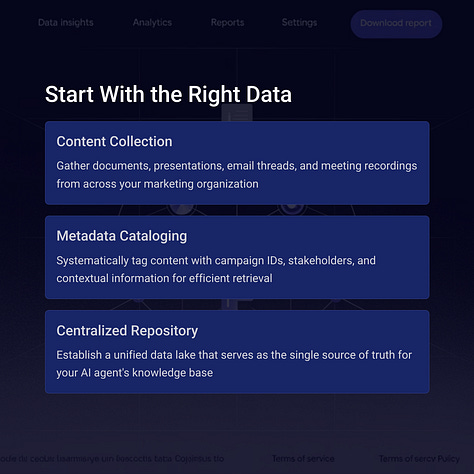

Data foundations

Everything starts with data, the raw material from every document, slide deck, email, calendar entry, campaign brief, and analytic report produced by the marketing team. Audio and video files add a second dimension: meeting discussions, brainstorming sessions, and client calls. These assets must be catalogued in a central repository with metadata such as source, author, and date. Without this inventory, downstream indexing and retrieval will collapse under gaps or inconsistencies.
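To make the inventory concrete, here is a minimal sketch of one catalog entry and a check for missing metadata, assuming a simple in-memory catalog. The field names (`asset_id`, `source`, `author`, `created`, `asset_type`) and the `Catalog` class are hypothetical, not a required schema; a real repository would persist these records in a database or data catalog tool.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class CatalogEntry:
    """One row in the central asset inventory (hypothetical schema)."""
    asset_id: str
    source: str       # where it came from, e.g. "drive" or "zoom" (illustrative values)
    author: str
    created: date
    asset_type: str   # "document", "slides", "email", "audio", ...
    tags: list[str] = field(default_factory=list)


class Catalog:
    def __init__(self) -> None:
        self._entries: dict[str, CatalogEntry] = {}

    def add(self, entry: CatalogEntry) -> None:
        # One record per asset: duplicates are rejected rather than silently overwritten.
        if entry.asset_id in self._entries:
            raise ValueError(f"duplicate asset_id: {entry.asset_id}")
        self._entries[entry.asset_id] = entry

    def missing_metadata(self) -> list[str]:
        # Gaps like an empty author are exactly what breaks indexing and retrieval later.
        return [e.asset_id for e in self._entries.values() if not e.source or not e.author]

    def by_type(self, asset_type: str) -> list[CatalogEntry]:
        return [e for e in self._entries.values() if e.asset_type == asset_type]


catalog = Catalog()
catalog.add(CatalogEntry("brief-001", "drive", "J. Doe", date(2024, 3, 1), "document"))
catalog.add(CatalogEntry("call-017", "zoom", "", date(2024, 3, 4), "audio"))
print(catalog.missing_metadata())  # ['call-017']
```

Running a check like `missing_metadata()` before ingestion is a cheap way to catch inventory gaps early.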

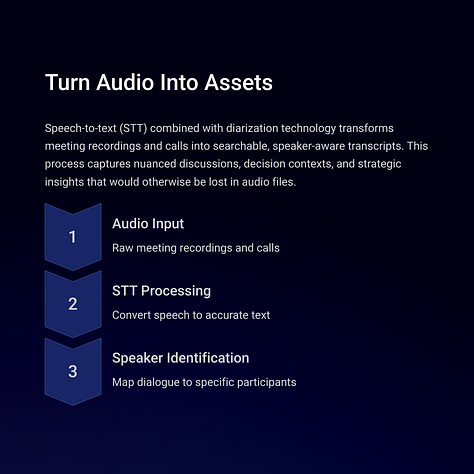

Transcription and structuring

Audio is only useful if it can be searched. Modern speech-to-text engines can transcribe meetings with diarization, which segments the recording by speaker and labels who said what. A good transcript includes timestamps, speaker labels, and confidence scores. From there, summaries and action-item lists can be generated automatically. This turns unstructured conversation into structured, queryable artifacts and preserves attribution, so an agent trained on “SEO lead guidance” draws from the right voice.
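Here is a sketch of what a diarized transcript might look like once parsed, plus a helper that attributes text to speakers and sets aside low-confidence segments for review. The `Segment` format and the 0.8 threshold are assumptions for illustration; every speech-to-text service returns its own schema, and raw diarization labels (such as "SPEAKER_00") usually have to be mapped to real names first, which this sketch assumes has already happened.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Segment:
    speaker: str       # speaker label, assumed already mapped to a person or role
    start: float       # seconds from start of recording
    end: float
    text: str
    confidence: float  # 0.0 to 1.0, as reported by the speech-to-text engine


def group_by_speaker(segments, min_confidence=0.8):
    """Return ({speaker: [text, ...]}, [segments that need human review])."""
    by_speaker = defaultdict(list)
    needs_review = []
    for seg in sorted(segments, key=lambda s: s.start):
        if seg.confidence < min_confidence:
            # Low-confidence text is held back rather than attributed to anyone.
            needs_review.append(seg)
            continue
        by_speaker[seg.speaker].append(seg.text)
    return dict(by_speaker), needs_review


segments = [
    Segment("SEO Lead", 0.0, 4.2, "Prioritize the pricing page keywords.", 0.93),
    Segment("Copywriter", 4.3, 7.9, "I will draft two headline options.", 0.88),
    Segment("SEO Lead", 8.0, 9.1, "[inaudible] by Friday", 0.41),
]
attributed, review = group_by_speaker(segments)
print(attributed["SEO Lead"])  # ['Prioritize the pricing page keywords.']
print(len(review))             # 1
```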

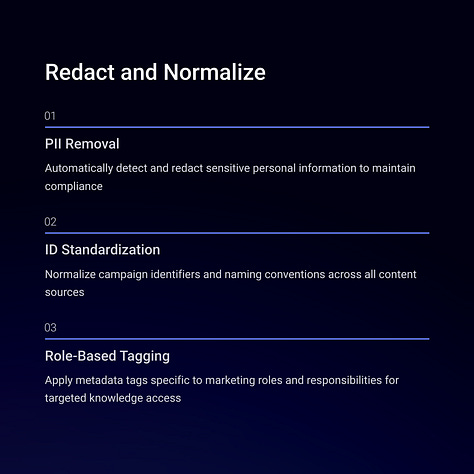

Data cleaning and privacy

Raw marketing data is messy, and there’s a lot of meaningless junk in it. Names, emails, phone numbers, and customer identifiers are scattered throughout. Before indexing, these need to be redacted or pseudonymized. Automated PII detection that combines regex rules with named-entity recognition can cover most cases. Normalization is just as important: standardizing dates, campaign IDs, and product codes ensures consistent retrieval. At this stage, tags such as channel, persona, and campaign phase are added so agents can filter their knowledge base down to role-relevant material.
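A minimal sketch of the regex layer, date normalization, and tagging, assuming simple patterns for emails and phone numbers and a hypothetical `CUST-` customer ID format. Regexes alone will miss personal names and free-form identifiers; that is the job of the named-entity recognition step, which is not shown here.

```python
import re
from datetime import datetime

# Illustrative patterns only; production PII detection also needs NER and broader rules.
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")
CUSTOMER_ID = re.compile(r"\bCUST-\d{4,}\b")  # hypothetical internal ID format


def redact(text: str) -> str:
    # Customer IDs are replaced before phone numbers so their digits are not misread as a phone.
    text = EMAIL.sub("[EMAIL]", text)
    text = CUSTOMER_ID.sub("[CUSTOMER_ID]", text)
    return PHONE.sub("[PHONE]", text)


def normalize_date(raw: str) -> str:
    """Coerce a few common date spellings to ISO 8601 (YYYY-MM-DD)."""
    for fmt in ("%Y-%m-%d", "%m/%d/%Y", "%d %B %Y", "%B %d, %Y"):
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {raw!r}")


def clean_record(text: str, raw_date: str, tags: dict) -> dict:
    # Tags (channel, persona, campaign phase) travel with the text into the index.
    return {"text": redact(text), "date": normalize_date(raw_date), **tags}


record = clean_record(
    "Follow up with jane@example.com (CUST-20931) at +1 555 010 2000.",
    "March 4, 2024",
    {"channel": "email", "persona": "IT buyer", "campaign_phase": "nurture"},
)
print(record["text"])  # Follow up with [EMAIL] ([CUSTOMER_ID]) at [PHONE].
print(record["date"])  # 2024-03-04
```

Pseudonymization (replacing each identifier with a stable token instead of a generic placeholder) would be a natural next step if agents need to tell customers apart without seeing who they are.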

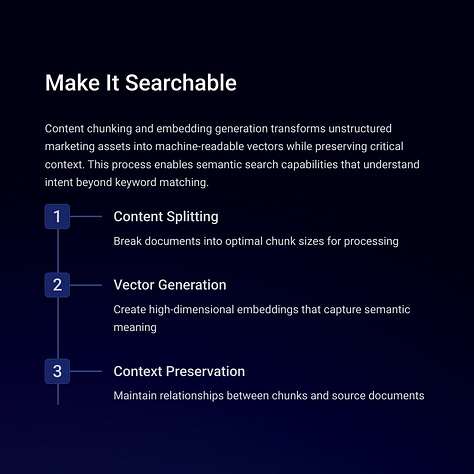

Chunking and embedding

I’ve written about this before. Large language models cannot consume gigabytes of text at once. Content is split into semantically coherent chunks, typically 500 to 1,500 tokens, that preserve logical boundaries such as section headers or agenda items. Each chunk is paired with its metadata and then converted into a vector embedding using a high-quality embedding model. This transforms prose, slides, and transcripts into a mathematical form optimized for semantic similarity. Check out Botpress, a service that does this automatically; it’s pretty impressive.
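A rough sketch of header-aware chunking, assuming Markdown-style `#` headers mark section boundaries and approximating tokens with whitespace-separated words. A real pipeline would count tokens with the embedding model’s own tokenizer and might also merge very short sections; both are left out to keep the idea visible.

```python
def chunk_document(text: str, metadata: dict, max_tokens: int = 1000) -> list[dict]:
    """Split on section headers, then on a word budget, keeping metadata on every chunk."""
    sections, current = [], []
    for line in text.splitlines():
        # A header starts a new section, so chunks never straddle two topics.
        if line.startswith("#") and current:
            sections.append("\n".join(current))
            current = []
        current.append(line)
    if current:
        sections.append("\n".join(current))

    chunks = []
    for section in sections:
        words = section.split()
        # Words stand in for tokens here; swap in a real tokenizer for production use.
        for start in range(0, len(words), max_tokens):
            piece = " ".join(words[start:start + max_tokens])
            if piece:
                chunks.append({"text": piece, **metadata, "chunk_index": len(chunks)})
    return chunks


doc = "# Goals\nGrow organic traffic.\n# Tactics\nRefresh the pricing page. Add FAQ schema."
for c in chunk_document(doc, {"source": "q3-brief.docx", "author": "SEO Lead"}):
    print(c["chunk_index"], c["text"])
# 0 # Goals Grow organic traffic.
# 1 # Tactics Refresh the pricing page. Add FAQ schema.
```

Each resulting dictionary is what would be sent to the embedding model, with the metadata stored alongside the vector.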

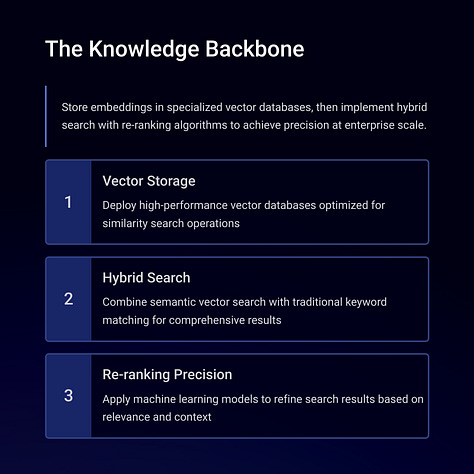

Vector indexing and retrieval

The embeddings are stored in a vector database such as Pinecone, Milvus, Qdrant, or Weaviate. Retrieval is hybrid: semantic similarity locates conceptually related chunks, while keyword search ensures precise matches on product names or campaign codes. Retrieval pipelines often include a re-ranking stage that orders results based on query relevance and freshness, boosting recent campaigns over archived material. This layer is the backbone of retrieval-augmented generation, supplying context that grounds each agent’s output.
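A toy sketch of hybrid scoring with a freshness boost, assuming embeddings have already been computed (the three-dimensional vectors below are made up) and using illustrative weights and a 180-day half-life. The databases named above handle this at scale, and several offer built-in hybrid search; this only shows how semantic, keyword, and recency signals can be blended and re-ranked in plain Python.

```python
import math
from datetime import date


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def keyword_score(query: str, text: str) -> float:
    # Fraction of query terms that appear verbatim (exact product names, campaign codes).
    terms = query.lower().split()
    words = set(text.lower().split())
    return sum(t in words for t in terms) / len(terms) if terms else 0.0


def freshness(created: date, today: date, half_life_days: float = 180.0) -> float:
    # Exponential decay: a chunk loses half its freshness every half_life_days.
    age = (today - created).days
    return 0.5 ** (age / half_life_days)


def hybrid_rank(query, query_vec, chunks, today, w_sem=0.6, w_kw=0.3, w_fresh=0.1):
    scored = []
    for c in chunks:
        score = (w_sem * cosine(query_vec, c["vector"])
                 + w_kw * keyword_score(query, c["text"])
                 + w_fresh * freshness(c["created"], today))
        scored.append((score, c))
    # Highest combined score first: the re-ranking stage in miniature.
    return [c for _, c in sorted(scored, key=lambda p: p[0], reverse=True)]


chunks = [
    {"id": "a", "text": "Spring launch plan for CAMP-24", "vector": [0.9, 0.1, 0.0], "created": date(2024, 3, 1)},
    {"id": "b", "text": "Archived spring launch recap", "vector": [0.9, 0.1, 0.0], "created": date(2021, 3, 1)},
    {"id": "c", "text": "Brand color palette", "vector": [0.0, 0.2, 0.9], "created": date(2024, 3, 1)},
]
ranked = hybrid_rank("camp-24 launch", [1.0, 0.0, 0.0], chunks, today=date(2024, 6, 1))
print([c["id"] for c in ranked])  # ['a', 'b', 'c']
```

Chunks "a" and "b" are semantically identical here; the exact campaign-code match and the recency boost are what push the current plan above the archived recap.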

Agent orchestration

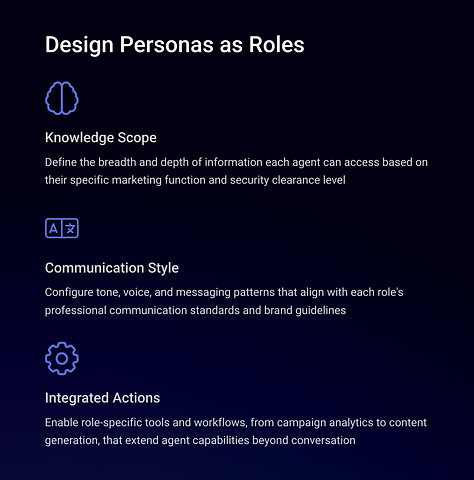

On top of retrieval sits the orchestration layer. Each agent is a persona defined by three elements:

Scope of knowledge — which indexes and metadata filters it can access.

Style and tone — captured in prompt templates or light instruction tuning.

Tools and actions — the ability to draft copy, fetch metrics, or propose keyword sets.
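These three elements map naturally onto a small configuration object. This is a hypothetical schema, not the format of any particular agent framework: `index_filters` stands for the metadata filters from the cleaning step, `system_prompt` for the style template, and `tools` for the names of actions the agent may call (the tool names themselves are made up).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Persona:
    name: str
    index_filters: dict          # scope of knowledge: allowed metadata values per key
    system_prompt: str           # style and tone
    tools: tuple[str, ...] = ()  # names of actions the agent is allowed to call

    def can_see(self, chunk_meta: dict) -> bool:
        # A chunk is in scope only if every filter key has an allowed value.
        return all(chunk_meta.get(k) in allowed for k, allowed in self.index_filters.items())


SEO_MANAGER = Persona(
    name="SEO Manager",
    index_filters={"channel": {"search", "web"}},
    system_prompt="You are a data-driven SEO manager. Be concise and cite sources.",
    tools=("fetch_search_metrics", "propose_keywords"),
)
COPYWRITER = Persona(
    name="Copywriter",
    index_filters={"channel": {"web", "email", "social"}},
    system_prompt="You write in the brand voice: warm, plain-spoken, no jargon.",
    tools=("draft_copy",),
)

print(SEO_MANAGER.can_see({"channel": "search"}))  # True
print(COPYWRITER.can_see({"channel": "search"}))   # False
```

Keeping personas as data rather than code makes it easy to review and version the “personality” of each agent alongside its prompts.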

This is honestly my favorite step, because here you’re largely defining the personality of your team. The “Head of Marketing” agent functions as a dispatcher, routing tasks to the appropriate specialists. For example, a request to “update the AdWords campaign” is routed to the SEO Manager agent, which pulls keyword strategy documents and recent performance logs. A request for release notes goes to the Copywriter agent, which pulls from brand guidelines and feature briefs.
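The dispatcher can start out very simple. Below is a keyword-based router, a deliberately naive stand-in: in practice the “Head of Marketing” agent would more likely ask an LLM to classify each request. The routing table, trigger phrases, and agent names are illustrative assumptions.

```python
# Hypothetical routing table: trigger phrases per specialist agent.
ROUTES = {
    "SEO Manager": ("adwords", "keyword", "seo", "ranking", "search campaign"),
    "Copywriter": ("release notes", "headline", "blog post", "tagline", "email copy"),
    "Analyst": ("report", "dashboard", "conversion", "roi", "metrics"),
}


def route(request: str, default: str = "Head of Marketing") -> str:
    """Pick the specialist whose trigger phrases appear most often in the request."""
    text = request.lower()
    best, best_hits = default, 0
    for agent, phrases in ROUTES.items():
        # Naive substring matching; short phrases like "roi" can misfire on other words.
        hits = sum(phrase in text for phrase in phrases)
        if hits > best_hits:
            best, best_hits = agent, hits
    # No match: the dispatcher keeps the task (or asks a clarifying question).
    return best


print(route("Update the AdWords campaign"))   # SEO Manager
print(route("Write release notes for v2.3"))  # Copywriter
print(route("Plan next quarter"))             # Head of Marketing
```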

Guardrails and evaluation

Accuracy and safety require deliberate controls. Every generated output should cite its source chunks, allowing humans to verify. Low-confidence responses should default to “I don’t know” rather than guessing. Evaluation suites covering factual Q&A, multi-turn campaign scenarios, and creative drafts provide metrics for groundedness, readability, and latency. Human-in-the-loop review ensures that outputs remain aligned with brand and compliance requirements.
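A minimal sketch of the “cite or abstain” rule, assuming each retrieved chunk arrives with an ID, its text, and a relevance score. The `generate` callable stands in for whatever LLM call you use (a stub is included so the sketch runs), and the 0.5 threshold is an arbitrary placeholder to tune against your evaluation suite.

```python
from typing import Callable


def answer_with_citations(question: str, retrieved: list[dict],
                          generate: Callable[[str, list[str]], str],
                          min_score: float = 0.5) -> dict:
    """Answer only from sufficiently relevant chunks, and always return their IDs."""
    supporting = [c for c in retrieved if c["score"] >= min_score]
    if not supporting:
        # Low confidence: abstain instead of guessing.
        return {"answer": "I don't know.", "citations": []}
    context = [c["text"] for c in supporting]
    return {"answer": generate(question, context),
            "citations": [c["id"] for c in supporting]}


# Stub generator so the sketch runs without a model; replace with a real LLM call.
def fake_llm(question: str, context: list[str]) -> str:
    return f"Based on {len(context)} source(s): {context[0]}"


hits = [{"id": "brief-001#3", "text": "Launch date is May 6.", "score": 0.82},
        {"id": "deck-044#1", "text": "Unrelated slide.", "score": 0.21}]
print(answer_with_citations("When do we launch?", hits, fake_llm))
# {'answer': 'Based on 1 source(s): Launch date is May 6.', 'citations': ['brief-001#3']}
print(answer_with_citations("Q4 budget?", hits[1:], fake_llm)["answer"])  # I don't know.
```

Returning citations as structured data, rather than only inside the generated text, is what lets reviewers and evaluation scripts check groundedness automatically.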

Deployment and governance

A production system must operate under enterprise standards. Access is managed with authentication and role-based permissions. Data is encrypted in transit and at rest. Monitoring tracks agent calls, retrieved chunks, and user feedback. Logs, including the sources each output cited, are maintained for auditability. Retention policies govern how long data remains indexed, and new assets are re-ingested periodically. Governance also covers privacy: documenting the lawful basis for data use and running regular impact assessments.
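Two of these controls are easy to sketch: an audit record for each agent call (including the chunks it cited) and a retention check that decides what should drop out of the index. The field names and the 730-day window are assumptions for illustration; actual retention periods should come from your legal and privacy teams.

```python
import json
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=730)  # placeholder policy, not a recommendation


def audit_record(user: str, role: str, agent: str, query: str, cited_ids: list[str]) -> str:
    """Serialize one agent call as a JSON line for an append-only audit log."""
    return json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,          # RBAC role under which the call was authorized
        "agent": agent,
        "query": query,
        "cited_chunks": cited_ids,
    })


def expired(indexed_at: datetime, now: datetime, retention: timedelta = RETENTION) -> bool:
    # Chunks older than the retention window are candidates for removal from the index.
    return now - indexed_at > retention


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
print(expired(datetime(2022, 6, 1, tzinfo=timezone.utc), now))  # True
print(expired(datetime(2024, 6, 1, tzinfo=timezone.utc), now))  # False
line = audit_record("jdoe", "marketing_editor", "Copywriter", "Draft release notes", ["brief-001#3"])
print(json.loads(line)["cited_chunks"])  # ['brief-001#3']
```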

Continuous learning

Agents improve when feedback is looped back into the system. Users can mark outputs as helpful, unhelpful, or incorrect, for example with a simple thumbs up or thumbs down. Corrections can be captured and, after proper review, used for supervised fine-tuning or prompt adjustment. New campaigns and assets are indexed on a regular schedule, so the agents stay current without costly retraining.
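A small sketch of that loop: record ratings per agent, compute a helpfulness rate, and send anything marked incorrect to a human review queue before it can influence prompts or fine-tuning data. The three rating values mirror the text; the class names and structure are hypothetical.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Optional

RATINGS = {"helpful", "unhelpful", "incorrect"}


@dataclass
class Feedback:
    agent: str
    output_id: str
    rating: str
    correction: Optional[str] = None  # the user's suggested fix, if any


class FeedbackLog:
    def __init__(self) -> None:
        self.counts: dict[str, Counter] = defaultdict(Counter)
        self.review_queue: list[Feedback] = []

    def record(self, fb: Feedback) -> None:
        if fb.rating not in RATINGS:
            raise ValueError(f"unknown rating: {fb.rating}")
        self.counts[fb.agent][fb.rating] += 1
        # Incorrect outputs (and their corrections) go to a human before any reuse.
        if fb.rating == "incorrect":
            self.review_queue.append(fb)

    def helpful_rate(self, agent: str) -> float:
        c = self.counts[agent]
        total = sum(c.values())
        return c["helpful"] / total if total else 0.0


log = FeedbackLog()
log.record(Feedback("Copywriter", "out-1", "helpful"))
log.record(Feedback("Copywriter", "out-2", "incorrect", "Product name is Acme Cloud, not Acme Sky."))
log.record(Feedback("Copywriter", "out-3", "helpful"))
print(round(log.helpful_rate("Copywriter"), 2))  # 0.67
print(len(log.review_queue))                     # 1
```

Only corrections that survive review would then be promoted into prompt updates or a fine-tuning dataset.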

Work is never done

Teams starting down this path should resist the urge to fine-tune models too early. Begin with retrieval-augmented generation and well-designed personas. This minimizes risk, maximizes freshness, and accelerates iteration. Fine-tuning becomes relevant once there is sufficient high-quality, role-specific conversational data to justify it. Above all, remember that the goal is not perfect mimicry but useful specialization: agents that act enough like their human counterparts to accelerate work, not replace judgment.

Tech Cheat Sheet

I mentioned a lot of roles and software in this article. Here’s a quick cheat sheet to help you build your team, tech stack, and AI pipeline fast.

TL;DR

Building AI agents that replicate a marketing department is not science fiction. It is a disciplined engineering project. The pipeline (ingestion, transcription, cleaning, chunking, indexing, retrieval, and orchestration) is well within the capabilities of modern engineering and product teams. The result is a set of digital colleagues that a head of marketing can call on, one by one or together, to plan, write, optimize, and analyze.

Part 1 showed the vision. Part 2 shows the blueprint. The opportunity now is to build responsibly, balancing efficiency gains with privacy, accuracy, and human oversight. Done right, AI agents can transform not just how marketing teams work, but who counts as part of the team.